A short story about how misguided engineering perfectionism seriously handicapped our search engine presence and made us invisible online.

Your worst enemy is yourself – no, really. As an engineer, you will put too much faith in making “smart” choices.

For a long time, I had a very wrong idea of what perfectionism meant. I thought, and I am embarrassed to admit it, that a perfectionist strives for perfection and eventually achieves it.

In reality, perfectionism is a personality trait that involves setting excessively high standards and being overly critical of oneself. Perfectionism can lead to various adverse outcomes, such as stress, burnout, anxiety, depression, and workaholism.

If this sounds familiar, you are not alone. That sounds like me at an early stage of building Wide Angle Analytics.

I am very proud of the final product, which is highly scalable, with loads of automation allowing a tiny crew to operate it with confidence. But on the journey here, I made a ton of mistakes. The most jarring example is the Wide Angle Analytics landing page and its evolution.

And I am not talking about design. I thoroughly appreciated the various levels of feedback. I was learning by trial by fire how to build an appealing, informative and converting website. Having been a backend engineer for most of my career, I needed a while to get there. Still, I was fully aware of my limitations. After 5 iterations, I am genuinely proud of how it looks. I feel no shame in showing it to my customers and more design-inclined peers.

The mistakes were more subtle than visual designs.

Level Hard: Start Like You Care Too Much

I will be talking only about the front-end side of Wide Angle Analytics. In the backend, I was always confident. Having built data-intensive applications for nearly two decades, I had plenty of experience getting things right on the first go.

The front end was where I made some serious mistakes.

The project started with some fundamental assumptions:

- Make landing and marketing pages share the codebase with the application for simplicity (d’oh).

- Separate content from the application to enable focusing on content.

- Focus on launching, and avoid premature optimization.

These sound reasonable initially, so let’s dive into each and see what went wrong.

One Codebase for the Application and Marketing Pages

You will often see SaaS applications serve marketing content on example.com. Once you log in, you are redirected to app.example.com or similar. If you squint, you can sometimes see a slight difference in the design language between the two.

Marketing and engineering teams typically move at different paces, so these differences are bound to happen. Even if the company has a mature design system.

Being a solopreneur and indie maker, I didn’t have the luxury of maintaining two code bases. And I wanted everything to be consistent (damn you, perfectionism).

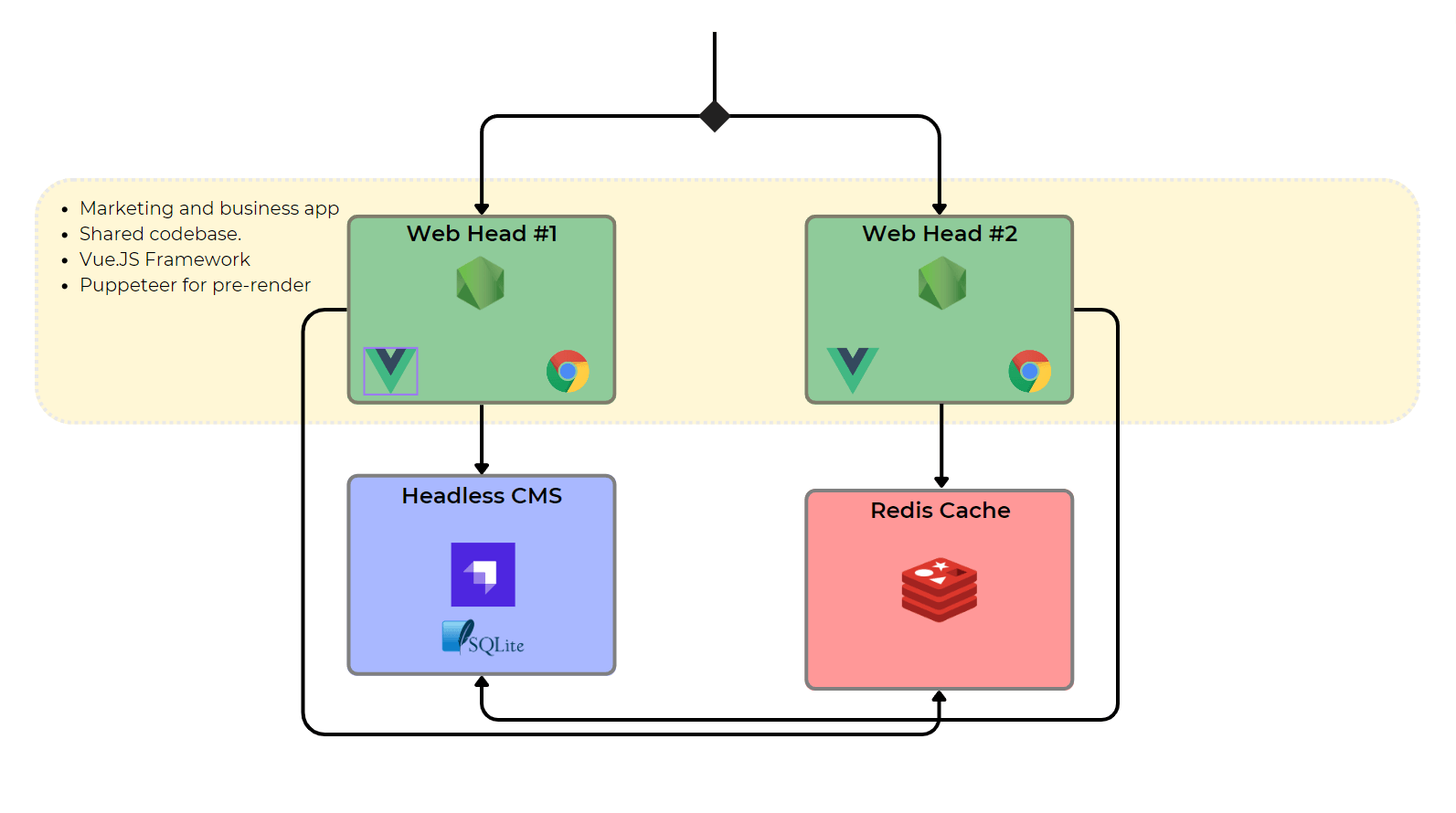

I settled on building the first version of Wide Angle Analytics in Vue.js with an internal component library. It felt good to sprinkle it with TailwindCSS, consistent colours captured in variables, and site-wide typography.

It felt magical to change the paragraph’s font and reflect it in all the marketing copy, blog, and application modal boxes. Everything indicated that this was the right choice.

An added benefit was a single deployment artefact. Deploy one web application, running in Node.js behind an Nginx reverse proxy, exposed as a service via a Kubernetes Ingress. Easy.

Initially, this approach felt really solid. No apparent issues.

I was not very concerned with performance at first. Even so, I occasionally checked the site loading times. Lighthouse scores of roughly 70-80 were making me feel good.

Separate Content Authoring from the Application

The first cracks started showing when I decided to add a blog. The ease of creating content was my main focus.

After hacking together a batch of documentation and blog articles in pure HTML inside Vue templates, I quickly realized this wouldn’t scale and that I needed a better solution.

I turned my attention to headless CMSs. I struck gold. I combined Strapi CMS with Vue components and leveraged dynamic content with advanced routing. Easy authoring and consistent rendering were achieved.

It was fast too. Sneak peek at Lighthouse, and everyone was happy with a score of around 80.

The added cost of running Strapi was an additional deployment service. With Kubernetes, it was straightforward and felt like a non-issue. Given the initial low traffic volume, I decided I could get away with an SQLite database hosted on an attached volume, at least until I reached the scale and traffic to warrant an external database.

Small project, one YAML configuration for Kubernetes and Ingress setting. The Content Service was up and running in no time.

Still feeling good about myself. 🥸

Get it out, and optimize it later

Ask the Indie Hacker community, and in unison, everyone will shout that you should avoid early-stage optimization. The goal is to launch fast and validate your idea.

So I did. My experience told me that the backend was in great shape, and performance tests were spitting out formidable numbers. The scaling infrastructure of OVH gave the product significant leeway. Wide Angle was ready for customers.

It was time to launch the front end and herald the arrival of the new web analytics to the world.

For a human visiting the website, the experience was fast and snappy. Lighthouse was reporting good numbers. They weren’t the highest possible, but I wasn’t too worried about it.

Indie makers often point to giant, corporate behemoths and their slow, ageing websites and say how they make billions, and hence you shouldn’t fret about yours.

So I didn’t. And Wide Angle Analytics was launched.

Here come the crickets 🦗

I wasn’t naive. Launching SaaS products in the 2020s is not that easy. The adage “Build it, and they will come” no longer holds.

As the product was built, I saw numerous other web analytics products enter the market. I was not fussed too much about it at first. Most of the competition took a naive and often misguided approach to compliance, failing at some simple criteria.

Having invested in compliance infrastructure, scrutinized every data processor in my supply chain and hired a DPO early, I was ahead of the curve compared with more established products.

But the message needed to go through.

The initial reaction was to point the finger of blame at the landing page copy. As an engineer launching my first product-driven business, I was learning what makes good copy on a live product. Thanks to an external consultant and a wee bit of time, the copy was getting better.

Sadly, traffic did not follow.

After a few iterations on the copy, intertwined with product changes resulting from initial customer feedback, I finally looked at SEO.

What I found was pure horror. A gut-wrenching feeling took over.

Crippling SEO failures

Google Search Console and Bing Webmaster Tools were consistent. They crawled the website but outright refused to index our content.

Feedback coming from Bing was far more actionable. It helped me fix major offenders like missing, too short or too-long meta tags. But more work was needed. There were more serious structural issues.

With limited time and resources, I focused on work that promised the best return on investment. Hence, I focused solely on fixing SEO for Googlebot.

The Root Cause

In this write-up, I will skip the issue of providing quality content. Not because it is not necessary – it very much is, but because our technical solution was the necessary condition for the already published content to get noticed.

Google’s crawler will happily understand JavaScript-generated web pages. I am talking about the classic SPA, one you can create with a pure framework like Vue.js.

Bing bot, not so much.

But what is interesting is that Googlebot will tank your website ranking if your indexable content is generated by client-side JavaScript.

Our goal number one: serve crawlers with static HTML pages.
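To make that goal concrete, here is a minimal TypeScript sketch of the difference between an SPA shell and a pre-rendered page, as seen by a client that does not execute JavaScript. The function names, the sample markup and the `MIN_VISIBLE_CHARS` threshold are all assumptions made for illustration. This is a rough heuristic, not how any search engine actually evaluates pages.

```typescript
// Strip <script>/<style> blocks and all remaining tags, leaving roughly
// the text available to a client that does not run JavaScript.
function visibleText(html: string): string {
  return html
    .replace(/<(script|style)\b[^>]*>[\s\S]*?<\/\1>/gi, " ")
    .replace(/<[^>]+>/g, " ")
    .replace(/\s+/g, " ")
    .trim();
}

// Arbitrary illustrative threshold, not an SEO rule.
const MIN_VISIBLE_CHARS = 40;

// True when the raw HTML response already carries readable content.
function looksPrerendered(html: string): boolean {
  return visibleText(html).length >= MIN_VISIBLE_CHARS;
}

// Hypothetical SPA response: an empty mount point plus a script bundle.
const spaShell = `<html><head><title>Blog</title></head>
<body><div id="app"></div><script src="/assets/app.js"></script></body></html>`;

// Hypothetical static response: the article text is in the HTML itself.
const staticPage = `<html><head><title>Blog</title></head>
<body><article><h1>Hello</h1><p>This paragraph is already present in the HTML response.</p></article></body></html>`;

const shellOk = looksPrerendered(spaShell);    // false: only the title survives
const staticOk = looksPrerendered(staticPage); // true: the copy is in the markup
```

Running a check like this against the raw response (for example, the output of a plain HTTP fetch) is a quick way to see what a non-JavaScript crawler has to work with.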

Untangling Content Handling – The Monster is Born

How do you add statically generated pages to a Vue.js app? Not that easily, it turns out. The awesome Content plugin and renderer are part of Nuxt. When we embarked on this task, Wide Angle used Vue 3, while Nuxt was still on Vue 2. There was no easy way to use these modules across frameworks.

Tough luck. Okay, so we can’t use Content to handle Markdown, nor can we use Nuxt’s pre-render.

But we already have content in Markdown, hosted in Strapi. So, how do we turn it into static HTML?

The Monster Is Born

I added a Node middleware in the web application code that used Puppeteer. Puppeteer is a fantastic toolkit that allows you to run a headless Chrome browser and capture rendered websites as if presented to the end user.

“We will pre-render pages on the fly” – I thought.

The middleware inspected user agents. For known bots, such as Googlebot, rather than serving Vue assets, it would trigger Puppeteer to capture the rendered page and serve the output to the bot.

To avoid hogging resources each time, the results were cached in Redis.
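The flow described above can be sketched roughly as follows. This is not the original middleware: the bot list, the `HtmlCache` interface, the `Renderer` type and the TTL value are hypothetical stand-ins, with the cache playing the role of Redis and the renderer playing the role of a Puppeteer-driven headless Chrome.

```typescript
// Hypothetical list of crawler user-agent fragments; a real list would be longer.
const BOT_PATTERN = /googlebot|bingbot|ahrefsbot/i;

function isKnownBot(userAgent: string | undefined): boolean {
  return userAgent !== undefined && BOT_PATTERN.test(userAgent);
}

// Minimal cache contract (assumption); Redis filled this role in the setup above.
interface HtmlCache {
  get(key: string): Promise<string | undefined>;
  set(key: string, html: string, ttlSeconds: number): Promise<void>;
}

// Stand-in for a headless-browser render of the given URL (assumption).
type Renderer = (url: string) => Promise<string>;

const CACHE_TTL_SECONDS = 3600; // illustrative value only

// Returns pre-rendered HTML for known bots, or undefined to signal
// "serve the regular SPA assets" for everyone else.
async function htmlForRequest(
  url: string,
  userAgent: string | undefined,
  cache: HtmlCache,
  render: Renderer,
): Promise<string | undefined> {
  if (!isKnownBot(userAgent)) return undefined;

  // Cache hit: skip the expensive browser render entirely.
  const cached = await cache.get(url);
  if (cached !== undefined) return cached;

  // Cache miss: render once, store the result, then serve it.
  const html = await render(url);
  await cache.set(url, html, CACHE_TTL_SECONDS);
  return html;
}
```

Note that this sketch caches whatever the renderer returns, which is exactly the weakness that shows up later in the story.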

Doesn’t sound horrible at first. Until you consider the following:

- crawler traffic is not predictable and very bursty,

- Puppeteer is very resource intensive, and

- this system uses four services to generate a single HTML page.

After putting this into practice, the first controlled runs with the Ahrefs bot, with JavaScript disabled, were somewhat optimistic. However, the situation remained difficult after a few days and multiple crawler visits triggered by re-indexing requests.

I was puzzled. Pure HTML content was served fast, with a high score in Lighthouse. Why didn’t it work? The answer was hidden in the logs, showing sporadic but noticeable timeouts and failures.

As I mentioned earlier, the crawler can generate burst traffic. Spawning multiple Chrome instances eventually drained memory on the node and ended up with broken content being cached and subsequently served for a few hours.
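For illustration only, here is one way this failure mode could be contained: cap the number of concurrent renders and refuse to cache output that looks broken. This is a hedged sketch of a possible mitigation, not something the original system did. `ConcurrencyLimiter`, `isCacheable`, `renderForCache` and the limit of 2 are hypothetical names and values.

```typescript
// Caps how many async tasks run at once; extra callers wait in a FIFO queue.
class ConcurrencyLimiter {
  private active = 0;
  private readonly waiting: Array<() => void> = [];
  private readonly maxConcurrent: number;

  constructor(maxConcurrent: number) {
    this.maxConcurrent = maxConcurrent;
  }

  async run<T>(task: () => Promise<T>): Promise<T> {
    if (this.active >= this.maxConcurrent) {
      // All slots busy: wait until a finishing task hands over its slot.
      await new Promise<void>((resolve) => {
        this.waiting.push(resolve);
      });
    } else {
      this.active++;
    }
    try {
      return await task();
    } finally {
      const next = this.waiting.shift();
      if (next) {
        next(); // pass the slot straight to the next waiter
      } else {
        this.active--;
      }
    }
  }
}

// Crude heuristic (assumption): only complete-looking documents get cached.
function isCacheable(html: string): boolean {
  return html.trim().length > 0 && /<\/html>\s*$/i.test(html);
}

const renderLimiter = new ConcurrencyLimiter(2); // illustrative cap

// Resolves to HTML that is safe to cache, or undefined when the output looks
// truncated. A render that throws (e.g. a timeout) rejects, so nothing is cached.
async function renderForCache(
  url: string,
  render: (url: string) => Promise<string>,
): Promise<string | undefined> {
  const html = await renderLimiter.run(() => render(url));
  return isCacheable(html) ? html : undefined;
}
```

Even with guards like these, the setup would still need a headless browser on standby, so this only treats the symptom.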

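It is tempting to fix this by lowering the crawl rate in robots.txt, but be aware that Googlebot ignores the Crawl-delay directive (crawlers such as Bingbot honour it), so a rule like this would not have throttled Google: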

User-Agent: Googlebot

Crawl-delay: 5

However, at this point, it was clear this was becoming ridiculous. Running two pods, each with 2 GB of RAM, one more for the headless CMS, and a 4th container for the cache, just to serve static pages was unwise, to say the least.

Untangling Content Handling – Kill the complexity

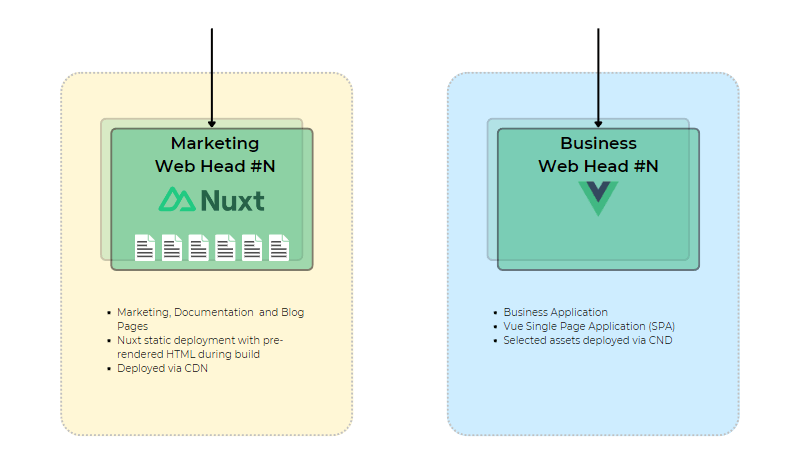

Enough is enough, I thought. More often than not, you just won’t outsmart the industry, so I decided to separate the marketing site from the application code.

I moved all the landing and marketing pages, documentation, and blog to Nuxt. The current website leverages the Content module for Markdown processing. Because it lacks application complexity, it is a pure static page.

We took this opportunity to redesign parts of the landing page while making the migration, so the comparison may not be exactly apples-to-apples. However, looking at the SEO of previously published blog articles, adopting static content profoundly impacted how search engines perceived our content.

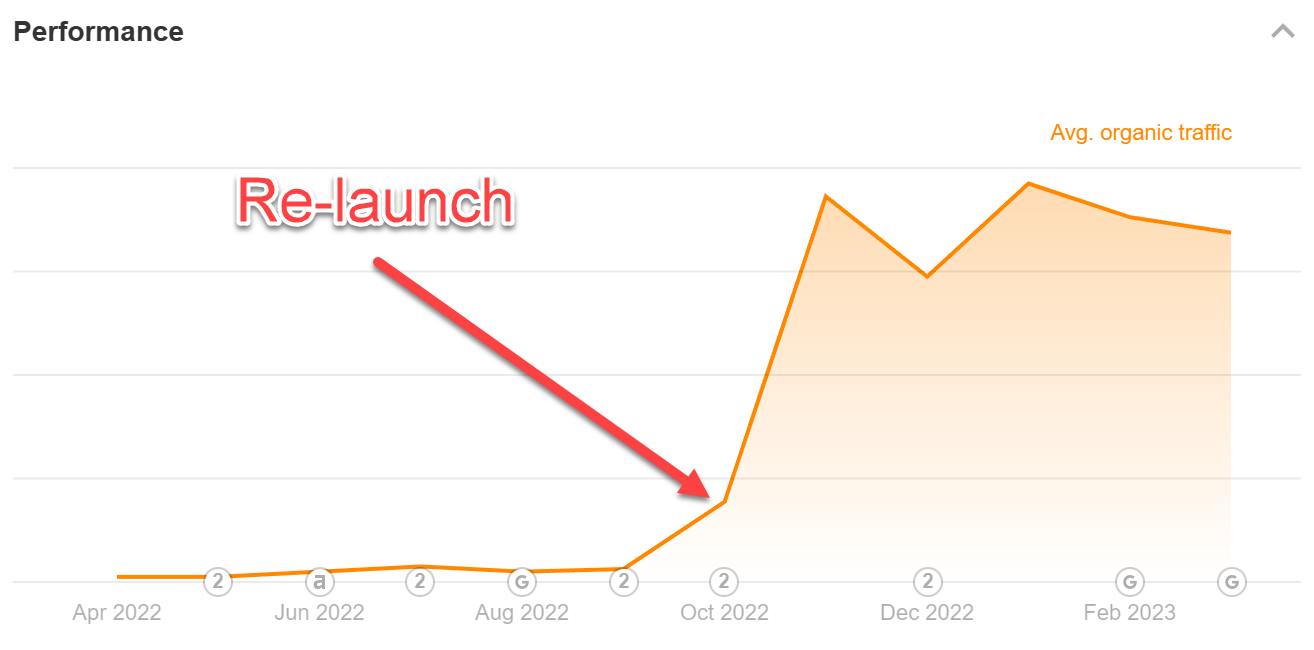

We re-launched in early October, and the impact was visible almost instantly.

In the process of simplification, I scrapped four pods, which required close to 8 GB of RAM and non-trivial compute power. These have been replaced with just two tiny containers acting as static content servers, together maxing out at 512 MB of reserved RAM and fractional CPU resources.

It wasn’t a problem of Kubernetes, despite what DHH and his pundits promote these days. Kubernetes allowed reshaping architecture and experimenting with ease and a great deal of flexibility.

Does it have to be perfect?

The Wide Angle Analytics marketing site is now on its 5th major redesign, with multiple smaller tweaks along the way.

And while it isn’t perfect, it is much easier to read, use, and approach for users and bots than it was until October 2022.

I mention this because getting things right early was necessary in this case. Taking a lesson from the Indie Makers handbook, I dismissed certain smaller issues, such as the Time to Interactive metric, thinking they were unimportant since hugely successful corporation XYZ has a website that looks like it was made with GeoCities.

But this narrative has a major flaw. You can get away with A LOT if you are an established business with a large customer base and a long tenure on the Internet. If you are the new kid on the block, you better be top-notch, or they will eat you alive.

It pays off to be a perfectionist. But it matters where you focus your efforts.

Lessons learned

To get to the point where we are today, with a growing audience and readership of our very active blog, I learned a few things. Sadly, the hard way.

Consider the benefits of revolutionary change vs evolutionary improvement. If evolution requires multiple steps over a long period and time is of the essence, it’s worth starting a revolution and making a few heads roll. In my case, cutting the app from the marketing site and using a purpose-built framework like Nuxt would have saved a ton of grief.

It is easy to lose perspective in the fog of war. When I took a step back and looked at four containers with 8 GB in total, I knew I had created a monster. A monster doing nothing but spitting HTML. It was ridiculous. Obviously, back then, it felt natural. Just one more improvement, one more and one more.

Scrutinize industry trends. Not because they are wrong. But because you may have misunderstood them. Adding a cache at a later stage, when it is needed, is a good example of optimization. In this case, optimizing early for the sake of content would have made the caching unnecessary altogether.

Closing thoughts

As I proofread this article, I can appreciate that the problems and solutions proposed might seem trivial to some.

It is interesting how, when you are in the thick of it, handling taxes, vendors, contractors, compliance and predatory competition, you can easily lose sight of the next best logical step.

Stay safe. Stay critical.
