Introduction

Privacy is a fundamental right, bound up with human dignity and integrity and with one’s honour and reputation. Protecting privacy is key to ensuring human dignity, safety and self-determination. It allows individuals to freely develop their own personality.

I have always believed that privacy is not only a fundamental human right, but the basis of all other rights. Without respect for individual and collective privacy, society cannot function. These self-evident truths have been hardwired into the human psyche since the beginning of agriculture some 10,000 years ago. It is through this seemingly instinct-driven belief that early societies were able to function and prosper.

Privacy was so fundamental to the development of humanity that almost all religious moral traditions strongly protected the right to individual privacy. For example, the Christian moral tradition provides a solid foundation for the right to privacy by linking it to the act of communication and information dissemination, a fundamentally relational activity oriented toward both the personal and common good. 1

Looking at Islam, we see that it also gives great importance to privacy. This is evident from some of the verses of the Holy Quran: ‘Do not spy on one another’ (49:12); ‘Do not enter any houses except your own homes unless you are sure of their occupants’ consent’ (24:27). 2

Given the overwhelming evidence for the intrinsic value of privacy within the arc of human social development, the trajectory of recent advances in surveillance and reconnaissance technology makes for a dazzling yet sinister contrast, as we shall explore in this article.

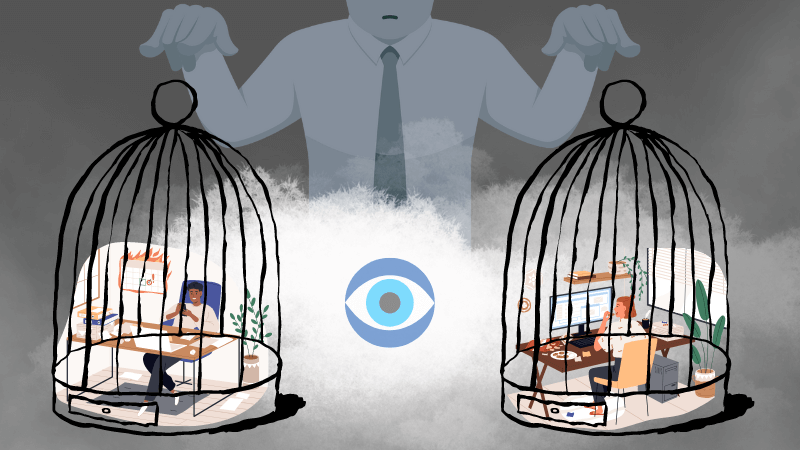

The ability of each of us to exercise our right to privacy is becoming increasingly tenuous in today’s world. Enabled by technology, governments, institutions and corporations are steadily making privacy harder to attain.

The rapid advancements of the internet era have created both the means and the opportunity for employers to surveil and spy on their employees. The Covid-19 pandemic, with millions working from home, has only accelerated the adoption and even acceptance of surveillance in our societies. Tapping into our reptilian fear centre, and using public safety, productivity and the like as pretexts, governments, corporations and institutions across the world have increased surveillance and control across the workforce and society.

The astonishing speed at which machine learning, facial recognition, malware and AI technologies have been repurposed for employee surveillance and social control is nothing short of alarming. These technologies continue to enable and drive further development of dystopian tech such as the Social Credit System and Bossware.

What is Bossware?

Bossware encompasses any type of employee productivity tracking or performance monitoring software. The name comes from the fact that typically this is something your supervisor (boss) would put in place to monitor you. In certain circumstances it is referred to as “spyware” because it can monitor your digital activities even when you are at home. 3

The Covid-19 pandemic fundamentally changed the nature of work for many, and millions have enjoyed working from home. The pandemic seems to have instigated a seismic shift in how people value their time and how they perceive their relationship with work. Recent movements such as Bailan (let it rot) in China and Quiet Quitting in the west have encapsulated this radical shift in perception. Post-pandemic, many have seemingly internalised the fleetingness of life, and are re-prioritising their life goals and reassessing their relationship with, and their need for, material goods.

These patterns of thinking, although currently more widespread and better known, are not new. Henry David Thoreau, in his best-known book, Walden, questioned the premise of being defined by, and dedicating our lives to, work. He wondered whether, by reducing our wants, we could also reduce the amount of work required to support a simpler lifestyle.

As more and more companies had little choice but to allow their employees to work remotely, managers became concerned about employee productivity. Indeed, many large and well-known companies, such as Tesla and Apple, began trying to force employees back to the office. Employees, however, continued to push back vigorously against these attempts, up to and including quitting their positions to find more flexible roles. This, combined with a tight labour market, pushed many managers to look at new options for monitoring employee productivity remotely.

A recent interview with Gartner revealed that before the pandemic only around 10% of companies said they used this type of software. Since the start of the pandemic, this number has jumped to about 30%. 4

An entire industry of “Bossware” sprang up seemingly overnight. To be sure, employee monitoring tools existed long before the pandemic, but the scale, choice, complexity and insidiousness of the post-pandemic spy software has exceeded what many of us could have imagined.

I have grouped these tools into four overlapping categories: prediction and flagging tools, biometric and health data collection tools, remote monitoring tools and, in my opinion the most potentially harmful, gamification and algorithmic management tools.

Prediction and flagging tools

These are tools that try to predict and flag certain undesired employee behaviours. Specifically these aim to identify or deter potential fraud or “rule breaking”. Often marketed as advanced management tools, they can provide a foundation for discriminatory practices in workplace evaluations and categorise and label employees with risk categories based on patterns of behaviour.

Although older surveillance tools such as CCTV cameras provided similar, albeit basic capabilities, this automation of surveillance data collection and review has improved the ability of employers to report and classify employees into potential internal threat categories that can be measured through risk scores.

These tools are usually deployed through the employer’s IT systems and include monitoring and surveillance software used to record computer activities like keystrokes, browsing histories, file downloads, and email exchanges. In most cases they do not give managers direct monitoring access; rather, they are fully automated software programmed to flag specific behavioural or data patterns. Some surveillance products, for instance, claim to identify when an employee is distracted and not working efficiently.5
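To make the idea of automated flagging concrete, here is a minimal sketch in Python. It is not taken from any real product: the `ActivityEvent` record, the `RULES` table and the `flag_events` function are all hypothetical names, and the rules themselves are invented purely for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class ActivityEvent:
    """One recorded action on a work computer (hypothetical record format)."""
    employee_id: str
    kind: str    # e.g. "file_download", "browse", "email"
    detail: str  # e.g. a file name or a URL


# Hypothetical rules, invented for this sketch. Each rule looks at a single
# event in isolation and answers "does this look suspicious?".
RULES: Dict[str, Callable[[ActivityEvent], bool]] = {
    "file_download": lambda e: e.detail.endswith((".zip", ".sql")),
    "browse": lambda e: "jobs" in e.detail,
}


def flag_events(events: List[ActivityEvent]) -> List[ActivityEvent]:
    """Return every event that matches the rule registered for its kind."""
    flagged = []
    for event in events:
        rule = RULES.get(event.kind)
        if rule is not None and rule(event):
            flagged.append(event)
    return flagged
```

Even this toy version shows where the accuracy problem comes from. A rule that matches the word "jobs" in a URL would flag an employee visiting the company's own internal transfer page just as readily as one browsing a competitor's careers site, because the rule sees a pattern and not the context.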

Risk assessment is commonly used by human resource departments in the hiring process, both in sourcing and candidate selection. Previous risk assessment formats, such as background checks and criminal records checks, typically identify candidates based on less defined categories. However, with newer technology, risk assessment is taken to a whole new level. Some recruitment technology companies, for example, specialise in analysing job seekers’ speech and facial expressions in video interviews.6 Aided by AI, this analysis is then correlated with the applicants’ social media accounts and other online activities, and an “employability score” is generated.7 These tools are not always used to track efficiency; some also flag “suspicious activity” with the aim of protecting intellectual property or preventing data breaches. They create the potential for abuse and raise issues of accuracy, unfair profiling and discrimination.

Remote monitoring tools

These are tools used to manage employees, monitor their activities and measure their performance remotely. Organisations use them to decentralise and facilitate remote work, while still being able to surveil and control workers as if they were on premises.

In many jurisdictions there is currently little limit, legal or practical, to the amount or type of information a company can remotely gather, store and analyse about its employees. This covers everything from logging individual keystrokes and using the webcam to take snapshots of the employee and their surroundings, to real-time tracking of employee movements via GPS data on a company-provided phone and the recording of conversations. There should be zero expectation of privacy on employer-owned devices.

This unlimited collection of data by corporations raises serious concerns about the safety and ownership of that data. As we have witnessed in recent years, no organisation, no matter how large or sophisticated, is immune from data breaches and data theft. The more data employers collect about employees, the larger the potential for harm following a breach, a data-sharing arrangement, or an outright sale.

This type of software, including apps like Prodoscore or Hubstaff, aggregates data into what the vendors call “productivity scores”8 that could be tied, overtly or covertly, to bonus metrics or punitive measures. A recent report published by the University of California, Berkeley Labor Center calls out these sorts of scores as dehumanising, because they remove basic autonomy and dignity in the workplace.9
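As a rough illustration of what "aggregating data into a score" can mean, the sketch below combines a few activity signals into a single number. It is an assumption-laden toy and not the formula used by Prodoscore, Hubstaff or any other vendor: the signal names, the `WEIGHTS` table and the `productivity_score` function are all hypothetical.

```python
from typing import Mapping

# Hypothetical weights, invented for this sketch. They sum to 1.0 so the
# final score lands between 0 and 100.
WEIGHTS = {
    "keyboard_mouse_activity": 0.5,  # share of tracked time with input activity
    "emails_sent": 0.2,              # emails sent, scaled to 0..1 against a target
    "calls_made": 0.3,               # calls made, scaled to 0..1 against a target
}


def productivity_score(signals: Mapping[str, float]) -> float:
    """Combine signals (each expected in the range 0..1) into a 0-100 score.

    Missing signals count as 0, and out-of-range values are clamped to 0..1.
    """
    total = 0.0
    for name, weight in WEIGHTS.items():
        value = min(max(signals.get(name, 0.0), 0.0), 1.0)
        total += weight * value
    return round(100 * total, 1)
```

Under these assumed weights, an employee who spends a morning reading a long contract, with little typing and no emails or calls, would score close to zero despite doing real work. The number reflects only what the software can count.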

Health and biometric data collection

Workers’ biometric and health data can be collected through tools like wearables and fitness-tracking apps. Other kinds include timekeeping systems that are activated via the employee’s fingerprint, which can also be used for digitally tracking work shifts. These advanced time-tracking techniques can generate itemised records of work activities, which may be used to facilitate wage theft by the employer, or allow employers to tighten the definition of what counts as paid time.

The problem with employers having access to these kinds of sensitive health data is twofold. First, in most jurisdictions there is no transparency, and no requirement for the employer to disclose what kind of data was collected about an employee, when it was collected, and for what purpose. There is nothing stopping the employer from collecting data 24/7 and collecting whatever data the hardware permits.

Second, biometric data collected by the employer can be used to track non-work-related activities and information, which can then potentially be used to classify, categorise, and score employees according to their health data. This infringes on the boundaries of worker privacy, creates multiple paths for discrimination, and raises serious questions about consent and workers’ ability to opt out of tracking.

Gamification and algorithmic management

Surveillance is essential for algorithmic management, in which collected data is used to feed automatic decision-making algorithms. Uses include automatic scheduling and optimisation, employee reviews, and other decisions about employees. For instance, Uber, the ride-hailing service, collects both individual and aggregate driver data through drivers’ phones, including locations, accelerations, working hours, and even braking patterns.10 Other companies such as TikTok, Facebook and Google rely on engagement algorithms to influence user behaviour and opinions without consent or knowledge. The data teams working at these companies use these algorithms to identify what users watch and listen to, whom they follow and who follows them, whether they interacted with or commented on a piece of content, what they bought or what movie they recently watched, and much more.

Under some conditions, these management algorithms can assist managers by automating certain actions, such as sending workers automated pokes or modifying performance criteria on the basis of an employee’s real-time data.

A more surreptitious aspect of these technologies is gamification. Gamification converts work tasks into a competition driven by performance stats. This taps into the brain’s dopamine reward centre, which is what gives it its game-like pull. The concern with these practices is that they create a work environment that pressures employees to meet exacting and often unrealistic efficiency benchmarks.

This unbridled growth in surveillance and data collection, combined with gamification, machine learning and algorithmic management, has dramatically tipped the balance of power between employer and employee. The motives and outcomes of workplace monitoring have become increasingly opaque and invasive. Employees’ lack of visibility into the inner workings of these tools creates an untenable conflict of interest between the employee’s striving to succeed and the employer’s need to maintain its information advantage.

Social credit system and your social score

The social credit system is a program devised by the authoritarian Communist Party of China for one purpose: social control. It utilises advanced software and hardware enabling facial recognition, AI-boosted video surveillance, and massive amounts of data on all aspects of a Chinese citizen’s life. These are then combined, correlated and analysed by a centralised AI system to produce a “Social Credit” score for each citizen.

This social score is then used by the Chinese state to reward positive social behaviours and punish undesired ones. The score can restrict the ability of individuals to take actions such as purchasing plane tickets, acquiring property or taking out loans.11 It doesn’t take a data scientist to imagine the catastrophic implications for free people everywhere.

Increasingly, we are witnessing small incremental steps towards the implementation of a similar system in the west as well. The pandemic laid bare the fragility of the rights we have come to take for granted, such as freedom of speech and expression. While in China the social credit system is driven primarily by the state, in the west it is being increasingly championed by woke corporations that are asserting their influence over the social discourse in western societies. The ramifications of this corporate-driven social credit system under construction are massive. The same companies that can track your activities and give you corporate rewards for compliant behaviour could use their powers to block transactions, add surcharges or restrict the use of their products to anyone they deem undesirable.12

Examples are plentiful and include the media’s (social and traditional) large-scale suppression of ideas, speech or thoughts that, in their assessment, did not align with the mainstream narrative being championed by the state.

Another example, one that I personally witnessed, is the Government of Canada’s use of the Emergencies Act (read: martial law) to suppress, demonise and dehumanise people who were expressing their legitimate concerns and frustrations. It went as far as monitoring individual donations to certain undesired causes, and then using that information to freeze people’s bank accounts.13

Our future could become ever more aligned with unfree societies. In 2021, the British government announced its own version of a health social credit system.14 Considering the growth of algorithms and our ever-increasing dependence on products from tech giants, the ability to track, censor and eventually punish ordinary citizens will become normalised over time.

Conclusion

Privacy and employee advocates are pushing back against the power imbalance that these technologies create. They are questioning the accuracy and fairness of the collected data at the lowest level.

In many cases, employees are unaware that they are being spied on while vast amounts of data are being collected about them. At the same time, there is no easy way for the worker to peer into the data and scrutinise it for accuracy or fairness. Questions about the retention, use or sale of said data remain unanswered. Furthermore, employers may use this information to discriminate against certain individuals or groups of people based on race, gender, age, disability status, religion, sexual orientation, vaccination status or political affiliation.

It is clear that we, as a society that values freedom and privacy, must valiantly resist and reverse this abuse of technology towards social control and suppression. Workplace protections must also be strengthened to address the information inequality, biases, accuracy, and shifts in power that we are witnessing. We must find solutions that allow for the balancing of the economic interests of corporations with the privacy interest of the individual.

Privacy and freedom are the two pillars upon which our civilization was built. You cannot have one without the other. This concept of individual freedom and privacy lies at the core of our liberal societies. Millions of people fought and died over many centuries to bequeath to us this legacy of individual liberty. It is said that history is not a straight road, but a circle that repeats itself with new characteristics. Therefore it behoves us to take lessons from history and to collectively and individually question, resist, and counter these attacks eroding the very foundation of our civilization. We must exert every effort to forestall a replay of the horrors of the past.

In the next instalment of this two-part series, we will discuss, compare and analyse the legal and ethical perspectives on the topic.

Footnotes

1. https://www.thepublicdiscourse.com/2020/02/59611/
2. https://www.tandfonline.com/doi/abs/10.1080/13600830701532043
3. https://www.cloudavize.com/what-is-bossware/
4. https://www.nytimes.com/wirecutter/blog/how-your-boss-can-spy-on-you/
5. https://www.scientificamerican.com/article/your-boss-wants-to-spy-on-your-inner-feelings/
6. https://www.pbs.org/newshour/economy/making-sense/how-using-facial-recognition-in-job-interviews-could-reinforce-inequality
7. https://www.washingtonpost.com/technology/2019/10/22/ai-hiring-face-scanning-algorithm-increasingly-decides-whether-you-deserve-job/
8. https://hubstaff.com/calculate-productivity
9. https://laborcenter.berkeley.edu/wp-content/uploads/2021/11/Data-and-Algorithms-at-Work.pdf
10. https://www.bloomberg.com/news/articles/2019-08-22/why-uber-drivers-are-fighting-for-their-data
11. https://www.businessinsider.com/china-social-credit-system-punishments-and-rewards-explained-2018-4
12. https://thehill.com/opinion/finance/565860-coming-soon-americas-own-social-credit-system/
13. https://www.bbc.com/news/world-us-canada-60383385
14. https://www.spectator.co.uk/article/we-need-to-act-now-to-block-britain-s-social-credit-system