“Data-processing systems are designed to serve man,” reads the somewhat outdated opening paragraph of the Data Protection Directive, the predecessor to the EU’s General Data Protection Regulation (GDPR).

Such systems must respect people’s “fundamental rights and freedoms, notably the right to privacy,” the law’s preamble continues.

Yet in the 28 years since that law passed, and the five since it was repealed, “data processing systems” (including computers, phones, apps, websites, and servers) have been used to collect more and more personal data, with little respect for people’s privacy.

But the tide is turning. Consumers are paying closer attention to how businesses treat information about them. Governments, legislatures, and even tech companies are imposing stricter rules to reflect those concerns.

There are strong ethical reasons to respect people’s privacy. But here are three reasons why taking privacy seriously, starting now, also makes good business sense.

New Laws Threaten Intrusive Business Models

On 15 August, the Consumer Data Industry Association (CDIA) published a website, NoToSB362.org, imploring Californians to oppose the “California Delete Act”, a bill that the industry body claims will “destroy California’s data-driven economy.”

“Data fuels California’s economy and delivers benefits to its residents every day,” the CDIA claims. “Hundreds of data providers—also known as data brokers—offer a tremendous range of beneficial services to companies, non-profits, government agencies, and academic institutions.”

The global value of personal data—information about almost every aspect of people’s lives, including their beliefs, health, and precise location—is hard to measure, but it is likely in the trillions of dollars.

Millions of businesses play a role in the information industry, whether by sharing data about their customers or paying to target them with ads.

But legislators in California and beyond have decided that these practices require much stricter regulation.

A Fast-Developing Legal Landscape

Since the EU GDPR took effect in 2018, the global data protection landscape has transformed.

Twelve US states now have cross-sectoral privacy laws, seven of which passed in 2023. While less comprehensive than the GDPR, most of these laws offer individuals some meaningful control of their personal data—and all include restrictions on targeted advertising.

Beyond the US, large economies such as Brazil, Japan, and China have either adopted or amended strong privacy and data protection laws in the past five years.

After many years of debate and delay, India’s Digital Personal Data Protection Act passed this August, providing the country’s 1.4 billion people with new rights and imposing sweeping new obligations on businesses.

These overlapping and sometimes conflicting rulesets have created a complex compliance environment, the sort of regime previously reserved for tightly regulated industries or international tax and HR rules.

But businesses that integrate privacy into the core of their operations will find it much easier to adapt to this new landscape.

Regulators Are Getting Tougher

The run-up to the GDPR enforcement deadline saw a flurry of compliance activities among businesses worldwide.

While most of the regulation’s rules and principles were not new, many companies were concerned about the GDPR’s substantially increased maximum fines.

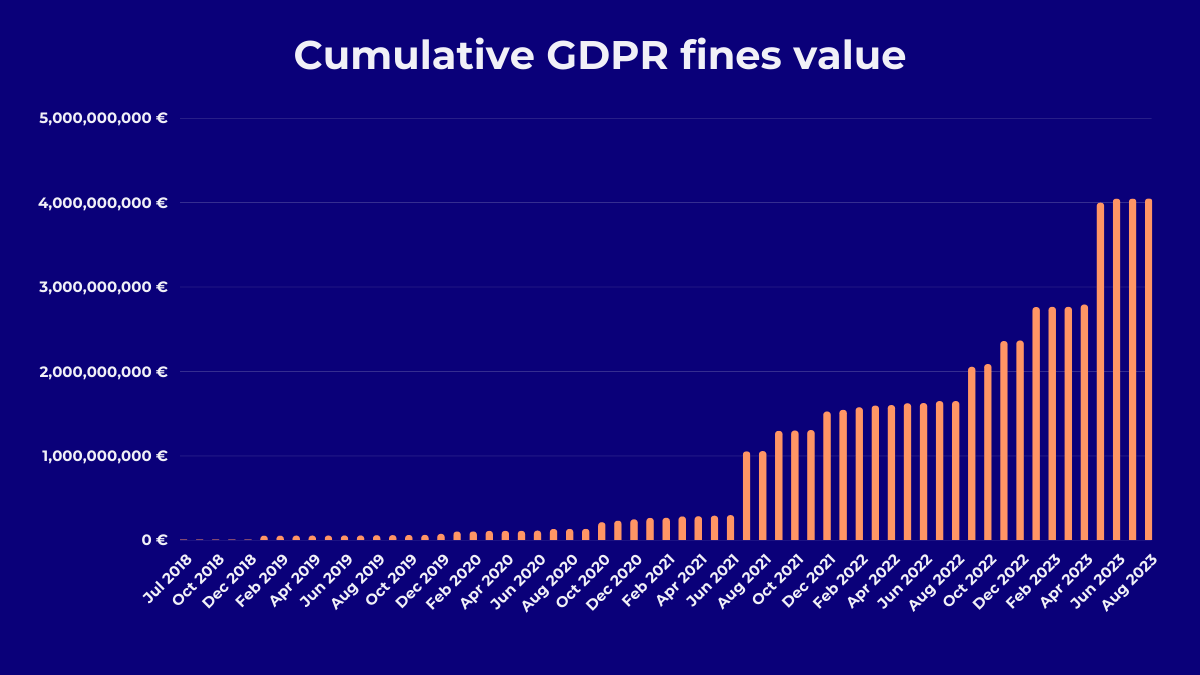

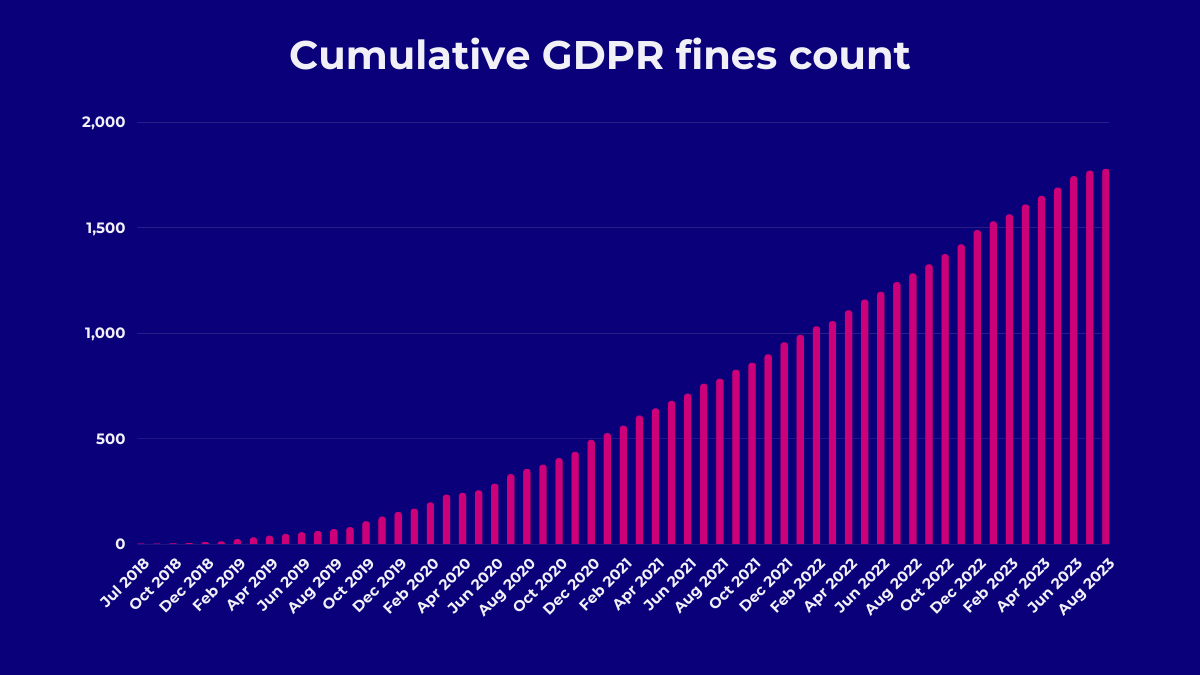

For most small businesses, the risk of a GDPR fine remains low. But as we’ll see, the frequency of GDPR fines is increasing.

And given the damage that an investigation under privacy and data protection law can cause, the risk is worth factoring into any company’s operations.

Do Fines Matter?

In the GDPR’s early days, the risk of large fines was arguably overblown.

While penalties for violating the GDPR can reach €20 million or 4% of a company’s worldwide annual turnover, whichever is higher, there was no initial explosion in fines once the law took effect.
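To make that ceiling concrete, here is a minimal sketch of the arithmetic. It assumes the GDPR’s upper tier of fines (Article 83(5)), where the maximum is whichever is higher of €20 million or 4% of worldwide annual turnover. The function name is hypothetical and chosen for illustration. It calculates the legal maximum only, not the amount a regulator would actually impose.

```python
def gdpr_max_fine_eur(annual_turnover_eur: int) -> float:
    """Illustrative upper-tier ceiling: the greater of EUR 20 million
    or 4% of worldwide annual turnover (assumed Article 83(5) tier)."""
    flat_cap_eur = 20_000_000
    turnover_cap_eur = annual_turnover_eur * 4 / 100
    return max(flat_cap_eur, turnover_cap_eur)


# A business turning over EUR 2 million faces the flat EUR 20 million ceiling.
print(gdpr_max_fine_eur(2_000_000))

# A business turning over EUR 1 billion faces a ceiling of 4% of turnover.
print(gdpr_max_fine_eur(1_000_000_000))
```

The two limits meet at a turnover of €500 million. Below that figure the flat €20 million ceiling applies, and above it the percentage takes over.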

But as early cases resolve and regulators cut their teeth, monetary penalties are becoming more common. A January study from DLA Piper revealed a doubling in the value of penalties from 2021 to 2023.

Many headline GDPR cases—including a landmark €1.2 billion fine against Meta—have been resolved this year, having been delayed by years due to an enforcement “bottleneck” allegedly created by the Irish Data Protection Commission (DPC).

And a proposed amendment to the GDPR, intended to streamline enforcement processes among data protection authorities, could further increase the number of fines.

Certain data protection authorities have been active from the start.

For example, Spain’s regulator has issued at least 700 GDPR penalties, including many against small businesses. Italy has imposed nearly 300, along with non-monetary strategic enforcement against AI companies like OpenAI and Replika.

And there’s another increasingly important risk for many companies—privacy-related legal claims by individuals.

A January Norton Rose Fulbright report revealed that around one-third of the 430 respondents experienced cybersecurity, privacy, or data protection legal claims in 2023.

In Europe, the Collective Redress Directive means class action litigation could become more common and more effective.

Stricter Applications of the Law

Along with new laws and increased enforcement activity, many regulators have been taking a stricter approach.

Over the past few years, certain widespread online practices have been deemed illegal under data protection and privacy laws.

Recent enforcement activity by the US Federal Trade Commission (FTC) provides a good example of this trend.

Unlike the EU, the US lacks a comprehensive data protection law covering every type of business. As such, the FTC tackles privacy violations using a handful of older consumer protection and sector-specific laws.

This year, the FTC has brought a series of actions targeting the use of cookies and pixels. The first such case was brought against a discount prescription app provider, GoodRx.

Like the vast majority of websites and apps, GoodRx used Meta and Google’s advertising and analytics tools to track users’ behaviour and deliver targeted ads.

The FTC argued that GoodRx’s use of such tools violated the Health Breach Notification Rule, a 2009 law requiring certain companies to notify consumers about security breaches involving their health information.

The “health information” GoodRx collected included data such as mobile IDs and IP addresses. The “breach of security” involved disclosing such information to companies like Meta and Google without users’ consent.

This is an expansive reading of an ostensibly narrow law. But the FTC is not alone in its hardline legal interpretations.

When regulators and courts deliberate on the meaning of data protection and privacy law, they tend to be strict.

In the EU, between 2021 and 2022, several data protection authorities investigated whether using Google Analytics broke the GDPR’s rules on transferring personal data outside of the EU. Almost universally, the regulators found that using the tool was illegal under the law in place at the time.

Even when companies applied Google Analytics’ strongest privacy settings and used the tool in non-sensitive contexts, they were breaking the law—according to the strict interpretation adopted by regulators.

The Industry Is Responding

Laws and regulatory decisions matter. However, the reality is that the web is dominated by a handful of powerful companies fuelled by the collection and processing of personal data. These companies’ business strategies have a huge impact on people’s privacy.

But the “tech giants” have finally started to change their practices.

Apple is the clearest example. The company’s App Tracking Transparency policy upended digital markets in 2021, when iOS developers were forced to request users’ permission before tracking their activity across apps.

Even Google is betting big on “privacy”—or at least, is implementing its own interpretation of the concept. The company’s Privacy Sandbox (“technology for a more private web”) will reshape online advertising by changing how Chrome users receive targeted ads.

While the changes will make it harder for third parties to identify individuals via cookies, they will still allow Google to serve targeted ads—and provide the company with greater control over large parts of the web.

Pulling Up the Drawbridge

Big tech’s new direction might arguably be good for individuals, but it could be bad for smaller companies that rely on personal data.

By controlling the way third parties access personal data, companies like Apple and Google are building walls around their users to reinforce their market position.

For smaller players in the digital markets, big tech’s idea of “privacy” means one thing: Tech giants will increasingly act as the gatekeepers of people’s personal data.

The trade in personal data is no longer a “free for all”. Tech companies are making it harder for third parties to monetise information about their customers.

What These Changes Mean for Your Business

As with tax and employment law, privacy is becoming a central and resource-intensive concern for most businesses.

During this transition toward a more private web, companies should consider whether their operations depend on the exploitation of personal data that might soon be beyond reach.

Embracing practices such as data minimisation and data protection by design can provide sustainable long-term growth, peace of mind, and improved trust.
