Call it coincidence, call it foresight, but it’s interesting how much employee monitoring, AI and “Bossware” have been in the media recently. I wrote the first part of this two-part series last month. In it we explored the various types of workplace surveillance and their intersection with machine learning, gamification, and algorithmic scoring. Since then I have seen these topics trending on LinkedIn and in the media. It was on the news that I learned the Canadian province of Ontario had just enacted an amendment to its employment standards legislation1 that compels organizations with 25 or more employees to disclose any digital surveillance to which those employees may be subject. This makes Ontario the first Canadian province to protect workers’ digital privacy.2

This regulation, although a good first step, falls short of the protections offered to workers in other jurisdictions, such as the EU. The European Union does not have blanket regulations on employee surveillance per se, but the General Data Protection Regulation (GDPR) does include rules on employee data protection and on the use of personal data for employee surveillance.

The GDPR sets out the following rules relevant to employee monitoring:

- Notifying the employees about the data collection procedures.

- Ensuring that employees have given their consent for personal data collection.

- Protecting all the data collected from unauthorized access or theft.

Some countries within the EU have additional legal protections that confer even stronger measures against unlawful surveillance. In France, for example, the employer must follow a three-step process in order to monitor its employees. First, the employer has to consult the Health and Safety Committee, the staff representatives, and the Works Council before carrying out any sort of monitoring. Next, the employer must file a declaration with the data protection authority. Lastly, employees must be informed of any monitoring and of what data will be collected, and must give their consent.3

Not surprisingly, Finland has some of the strictest anti-surveillance employment laws in the world.4 Finnish companies have very limited latitude to monitor emails, calls or computer usage, as these are protected by the confidentiality of communications under Finnish law, and employers may only collect information that is directly related to the performance of the job. Employers can, however, create a policy on best practices for employees’ use of company equipment.5 This kind of foresight, and thoughtful assessment of the impact these technologies may have on the population, helps explain why Finland rates at or near the top of most human development and freedom indexes.6

Another recent EU case that illustrates Europe’s hawkish and, in my opinion, correct stance on privacy rights in the workplace comes from Spain. The data protection agency there fined a company for illegally recording its employees with hidden CCTV in the washrooms and locker rooms, without disclosing that monitoring.7

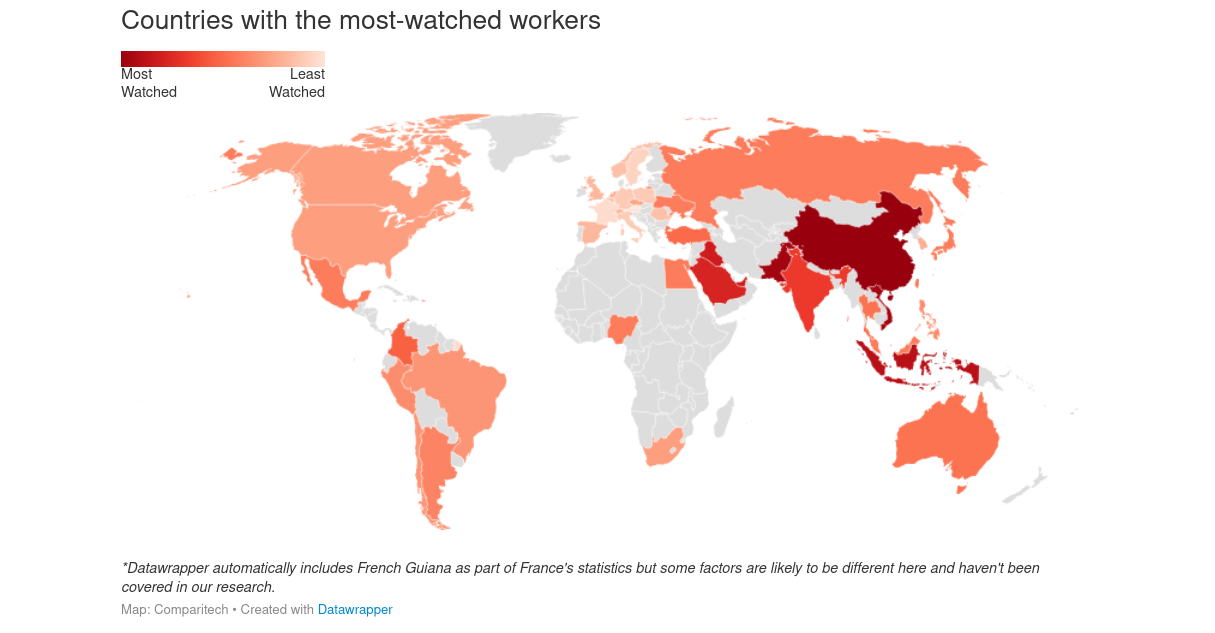

In most other jurisdictions, there are either no clear laws, or surveillance is permitted without the need to inform the person(s) being surveilled.

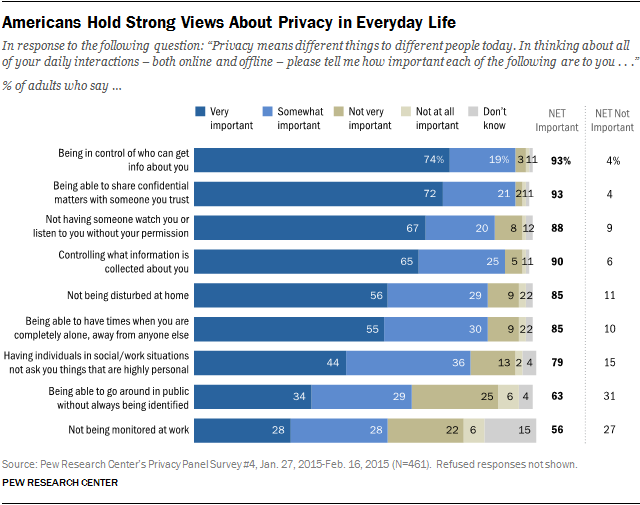

In most American states, for example, companies have no limits on employee monitoring when employees use company equipment. However, they are not allowed to track personal emails.8 These permissive laws persist even though a Pew Research study from as far back as 2015 showed that an overwhelming majority of Americans abhor being spied on.9 Yet the laws have been slow to change.

Source: Pew Research Center (footnote 9)

Source: Comparitech (footnote 10)

Staying in North America, employee surveillance was featured on the Canadian Broadcasting Corporation’s (CBC) *The National*, a weekday national news program, in a segment that aired on October 14, 2022.

In the segment, the journalist interviewed two people representing opposite sides of the Bossware debate.11 Representing the pro side was a software company that specializes in developing monitoring and surveillance software. Their argument centred on the premise that “data will set you free”, as in you can never have too much data about your business operations, including your employees. Even Microsoft’s chairman and CEO, Satya Nadella, has made remarks in support of data gathering and analysis. He argued that data will help resolve the work-from-home paradox12 that much of the West has experienced since the COVID-19 pandemic. What he was referring to is the apparent disconnect between how productive managers believe remote employees are and how productive the employees themselves believe they are. “Nadella also pointed out that there’s that paradox, and the best way to solve it is not with more dogma but with more data.”13

Interestingly, Mr. Nadella also voices his opposition to what he calls “spying on your employees”, arguing that it does not provide actionable insights and only measures “heat”, not output. This is despite the fact that many Microsoft communication products, such as Microsoft Teams and Microsoft 365, are well known to contain features that enable and facilitate “spying”.14

Does the “you can never have enough data” argument hold water? One could argue that by collecting only anonymised and/or aggregated data, no harm is done to individual privacy, and that these aggregated data models allow organizations, and their AI-enabled software, to make better business and human resource decisions.

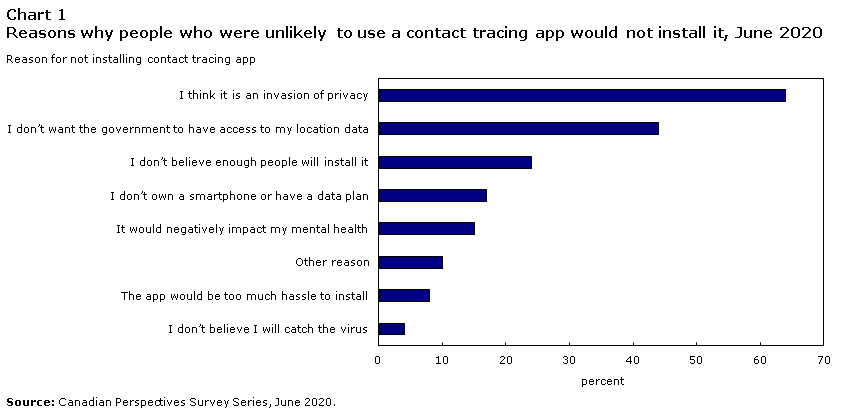

Governments, too, have tried to minimize the issue by calling it “bulk collection” of communications, or by using the COVID-19 pandemic as an excuse or cover, as the government of Canada did with its ill-conceived and failed15 COVID Alert app.16 The app failed because it never gained a large enough install base to be useful. No matter what it’s called or labeled, it is still, by definition, mass surveillance.

As I write this, a news story has just broken in the National Post: the Public Health Agency of Canada (PHAC) has been caught illegally tracking the locations of 33 million mobile devices, roughly 85% of the population, without their owners’ knowledge or consent. The agency claims that, because of the pandemic, it did not have time to inform the public.17 Canadians, by and large, are not comfortable with the government tracking their locations or surveilling them, as is evident in this survey conducted by Statistics Canada.

Source: Statistics Canada (footnote 18)

And to think that the stories that manage to filter into the media are but the proverbial tip of the iceberg of the mass surveillance taking place all around us. I shudder to imagine the unfathomable amounts of data being collected, stored and analyzed on each and every one of us, and the as-yet-unknowable purposes this data may serve.

All surveillance devices are designed to collect as much information as possible. They are created specifically to mine data, to exploit data, to draw conclusions from this data, and to find patterns in the information provided. Systems can, for example, be built specifically to flag suspicious words and to map relationships between “suspicious persons”.
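To make the idea concrete, here is a deliberately naive Python sketch of the two functions described above: flagging messages that contain watch-list words, then mapping who was in contact with whom among the flagged messages. The watch list, names and messages are all hypothetical, and real systems are far more elaborate; the point is only how little code it takes to turn ordinary workplace chatter into a list of “suspicious persons”.

```python
from collections import defaultdict

# Hypothetical watch list, chosen for illustration only.
WATCH_WORDS = {"protest", "strike", "union"}


def flag_messages(messages, watch_words=WATCH_WORDS):
    """Return the (sender, recipient, text) tuples whose text contains a watch-list word."""
    flagged = []
    for sender, recipient, text in messages:
        # Normalize each word: lowercase and strip trailing punctuation.
        words = {w.strip(".,!?").lower() for w in text.split()}
        if words & watch_words:
            flagged.append((sender, recipient, text))
    return flagged


def contact_graph(messages):
    """Map each person to a sorted list of everyone they exchanged messages with."""
    graph = defaultdict(set)
    for sender, recipient, _ in messages:
        graph[sender].add(recipient)
        graph[recipient].add(sender)
    return {person: sorted(peers) for person, peers in graph.items()}


messages = [
    ("alice", "bob", "Lunch at noon?"),
    ("bob", "carol", "Are you joining the union meeting?"),
    ("carol", "dave", "The strike vote is Friday."),
]
flagged = flag_messages(messages)
print(contact_graph(flagged))
# {'bob': ['carol'], 'carol': ['bob', 'dave'], 'dave': ['carol']}
```

The lunch invitation is ignored, while Carol lands at the centre of the graph simply because she talked to two colleagues about a meeting and a vote.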

As we discussed in part 1, mass surveillance interferes with privacy no matter how you spin it. The public must be vigilant against governments’ attempts to use real or manufactured public emergencies to conceal and, if caught, justify mass surveillance of their citizens. We cannot permit fear, and our natural desire for security, to coerce us into abandoning our basic rights and freedoms. Respect for individual privacy and individual freedoms does not contradict technological or social advancement. Nor does it restrict the ability to innovate or to conduct successful business within an ethical framework. Governments must be made to understand that spying on their citizens does not solve problems but creates them.

Alan Watts describes the paradox of the desire for security in the face of manufactured anxiety in his book *The Wisdom of Insecurity: A Message for an Age of Anxiety*:

“To put it still more plainly: the desire for security and the feeling of insecurity are the same thing. To hold your breath is to lose your breath. A society based on the quest for security is nothing but a breath-retention contest in which everyone is as taut as a drum and as purple as a beet.”19

Mass Surveillance and AI’s Sinister Symbiosis

As AI systems continue to evolve rapidly and diversify in application, their insatiable hunger for data also grows. In theory, the larger the dataset an AI system has access to, the better it gets at performing its task. Perhaps for the first time in human history, two potent technologies, artificial intelligence and automated surveillance, have formed an unholy alliance of sorts: a sinister symbiosis, if you will.

Together they are more powerful than either is individually, a classic case of self-reinforcing software symbiosis. One gathers and stores data, while the other uses this data to make better predictions, which in turn enhance the capabilities of the surveillance systems, allowing them to gather even more data. For some of us, it’s hard to see the downside, let alone the peril, of this self-feeding loop.

The vast volumes of data being collected, the automatic nature of that collection, and the algorithmic and AI analysis of these massive troves of data create as-yet-unknown and arguably unknowable consequences. Many AI systems make automated decisions that are opaque even to their creators.20 The more data an AI system has access to, the more information and relationships can be deduced from that data. The real prize, however, is the predictive ability of machine learning algorithms when given access to vast data stores. Indeed, the ability to predict and preempt “problems” before they happen is the metaphorical holy grail of artificial intelligence. These predictive powers are already being used in traffic management, supply chains, retail, healthcare, meteorology and many other sectors.21

Now let’s imagine the widespread and indiscriminate application of this technology in security and policing, for example. This begins to endanger civil liberties, due process, and the presumption of innocence in our legal system. Will an AI now predict who is likely to reoffend, and will that person then be labeled as such? Will they lose the right of appeal, or parole, because the AI has determined them to be beyond rehabilitation? Perhaps AI will preemptively suggest the arrest of people before they commit a crime because its analysis shows that they were about to. Philip K. Dick’s short story “The Minority Report” comes to mind. Or will we come to depend on AI to investigate and prosecute “thoughtcrimes” à la *1984*?

In *1984*, George Orwell described a two-way television, the telescreen, that gave the government visual and audio access to the homes and workplaces of all its citizens. Prisoners, for their part, were constantly reminded that they were being watched. The logic, as Orwell portrayed it, was that total surveillance whose methods were never disclosed to those subjected to it would lead citizens to assume they were constantly being watched wherever they were, which would discourage crimes from taking place to begin with.

Healthcare is another domain where AI decision making and the associated predictive analysis have the potential to commit ethical crimes. Imagine the ramifications when an AI system makes decisions on life expectancy, transplant list prioritization, cancer care and other areas of patient care. Do we really want to live in a society where life-and-death decisions are made without the input of human compassion and mercy?

It is in the employment and human resources sector that the harm from AI decision making is currently greatest. Those pitfalls are scattered throughout the sector, as I covered extensively in part 1 of this series.

AI Art and Intellectual Property

Not all AI systems rely on surveillance-acquired data to perform their jobs. Some, like the so-called AI art generators, get their data elsewhere. These have recently flooded the internet, showing off their “amazing” ability to take user prompts and synthesize them into complex pieces of “art”.

Calling these outputs art is misleading at best, and at worst harmful to our understanding of what art really is: an expression of human creativity. The problem with calling it art is that art is about evoking feeling and telling a story. Art is a deep expression of human emotion, a different and beautiful way of communicating ideas and feelings.

AI-generated images, on the other hand, are created through a procedural, rational, predetermined process that produces randomized outputs from natural language prompts. What is being output is essentially an algorithmic synthesis of the analysis of billions of images from the internet, produced in response to human-provided text prompts.

I can see this kind of image generation finding application in advertising, marketing and other creative industries. A human designer may be able to use some of these outputs as building blocks of larger works that incorporate various other forms of input.

However, questions about intellectual property are surfacing and growing louder each day. What happens when a company decides to start selling AI-generated images? Will the original creators, whose images or artwork were swallowed by the algorithm, be entitled to compensation?22 If a designer uses an AI-generated image as part of an advertising campaign, for example, would the advertiser then be on the hook for royalties to the original artist whose art the AI “ripped off”, erm, utilized in the generated image? These and other questions remain unanswered.

That being said, there is room for these technologies to mature and become more useful with time. We must address the thorny issue of intellectual property in relation to AI-generated images. We must also put in place laws that prohibit the use of these technologies to cause harm. Unfortunately, similar types of tech, such as deepfakes, are already being wielded for harm, particularly in pornography and politics. The potential for abuse, and the danger these technologies pose to our societies, cannot be overstated. As grand geopolitical struggles evolve and information wars rage, these tools are increasingly being used to spread propaganda and disinformation.

AI Copy Generation: Is It Plagiarism?

AI technology has also proliferated, interestingly, in content generation and copywriting. These AI-enabled text generators promise to auto-generate blogs, marketing content, articles and more. In my limited experience testing them, I found them to be, for the most part, repetitive, predictable and uninspired. Even Google has classified their output as “spam”.

As these tools develop further, one does wonder: will we, in the near future, witness AI “authors” writing books that make it onto the New York Times bestseller list? Sure, if literature is defined as a pleasing arrangement of words, then it is mathematically possible to create a work of fine literature given an infinite number of monkeys typing on an infinite number of typewriters for an infinite amount of time. As a matter of fact, this type of machine-generated library, of every book ever written and every book that will ever be written, already exists. It’s called the Library of Babel, a project that describes itself as “a place for scholars to do research, for artists and writers to seek inspiration, for anyone with curiosity or a sense of humor to reflect on the weirdness of existence”.23
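The arithmetic behind the infinite monkeys shows how much work “mathematically possible” is doing there. If every keystroke is picked uniformly at random from an alphabet of k symbols, one specific string of n characters comes out with probability (1/k)^n. The short sketch below assumes a 29-symbol alphabet (26 lowercase letters plus space, comma and period, an assumption chosen for illustration) and computes the order of magnitude for a single famous line.

```python
import math


def log10_odds_against(phrase: str, alphabet_size: int) -> float:
    """Base-10 logarithm of k**n: a random typist produces this exact
    n-character phrase with probability 1 / k**n."""
    return len(phrase) * math.log10(alphabet_size)


phrase = "to be or not to be"      # 18 characters, all in the assumed alphabet
exponent = log10_odds_against(phrase, 29)  # assumed 29-symbol alphabet
print(f"about 1 chance in 10^{exponent:.1f}")
# about 1 chance in 10^26.3
```

Eighteen characters already put the odds at roughly one in 10^26, and a whole novel runs to hundreds of thousands of characters.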

That’s not what literature is, however, nor is that how AI writing tools work. For a piece of writing to be considered literature, it must cross into the highly subjective realm of art. Humans alone are endowed with the faculties to determine when a text meets the definition of literature.

These writing tools work by crawling the internet and ingesting great amounts of writing: articles, books and any snippet of text they can find. They then use algorithms, natural language processing and deep neural networks to analyze data about the particular topic or keywords fed into them. After analyzing the data, the AI program processes it and applies grammar, sentence structure, punctuation and other pre-programmed language rules.24 The output, although human-readable, often reads as contrived, mechanical and repetitive.
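Commercial writing tools are built on large neural language models whose internals are beyond the scope of this article. A far simpler statistical stand-in, a toy bigram model, still illustrates why purely pattern-driven text tends to read as mechanical: it learns only which word most often follows which, then strings those choices together. The corpus and function names below are hypothetical, and greedy selection is used so the output is deterministic; this is not how any particular product works.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def generate(follows, start, length):
    """Greedily append the most frequent follower of the previous word.
    Ties go to the follower seen first, per Counter.most_common."""
    out = [start]
    for _ in range(length - 1):
        options = follows.get(out[-1])
        if not options:  # dead end: this word was never followed by anything
            break
        out.append(options.most_common(1)[0][0])
    return " ".join(out)


corpus = "the cat sat on the mat and the cat saw the dog on the mat"
model = train_bigrams(corpus)
print(generate(model, "the", 8))
# the cat sat on the cat sat on
```

With nothing but word-to-word statistics to go on, the model falls straight into a loop, an exaggerated version of the repetitiveness described above.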

Scrutiny of AI Decision Making

The human brain is not a computer, nor is memory akin to RAM. The information processing (IP) metaphor of the human brain is neither accurate nor helpful in understanding the nature of human intellect. Humans don’t make decisions according to the IP model but through a much more complex biochemical symphony that is not yet fully understood.25 If human and machine decision making are so fundamentally different, can we ever be sure that we can make sense of every AI system’s decisions? AI systems today make high-stakes decisions about our lives, jobs, health, homes, and societies. Yet we do not see robust auditing frameworks being put in place to scrutinize those decisions and ensure they are not causing harm.

A handful of regulators have started to catch up and are now demanding that specific AI auditing frameworks be put in place. The challenge is that we do not know how to audit a decision-making process we do not fully understand. Studies have shown that AI decision making is susceptible to bias, and that it is difficult to determine the root cause of that bias. For example, a 2018 study on facial recognition found that the AI had a harder time distinguishing the faces of darker-skinned people than those of white people.26 Consider that these AI systems are themselves built in an imperfect environment by imperfect humans, ingesting imperfect and biased data. Whether you look at the criminal justice system, housing, employment or our banking systems, discrimination is often baked into the outcomes the software is tasked to predict. The AI industry’s lack of representation of people who understand, and can work to address, the potential harms of these technologies only exacerbates this problem. There is ample evidence of AI’s potential to cause discriminatory harm, especially to already marginalized groups.27

Machine decision making must be made transparent and must allow for direct human oversight, auditing and scrutiny. Companies that use AI in decision making should be required to disclose that, and should be subject to public audits. Only then can we ensure the safety, fairness and, most importantly, the humanity of those automated decisions. A sober reflection on whether AI systems should be permitted to make certain decisions at all is also sorely needed.
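What might one small piece of such an audit look like? A basic check, in the spirit of the facial recognition study cited earlier, is to compare a system’s error rate across demographic groups. The sketch below assumes an auditor has a labelled sample of the system’s decisions tagged by group; the group names and records are hypothetical, and a real audit would use far larger samples and several fairness measures, not a single ratio.

```python
from collections import defaultdict


def error_rates_by_group(records):
    """records: iterable of (group, predicted, actual). Returns each group's error rate."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, predicted, actual in records:
        totals[group] += 1
        if predicted != actual:
            errors[group] += 1
    return {g: errors[g] / totals[g] for g in totals}


def disparity_ratio(rates):
    """Worst group's error rate divided by the best group's."""
    best, worst = min(rates.values()), max(rates.values())
    if worst == 0:
        return 1.0  # no errors anywhere, so no disparity
    if best == 0:
        return float("inf")  # one group is never wrong, another sometimes is
    return worst / best


# Hypothetical audit sample: (group, system's answer, ground truth)
records = [
    ("group_a", "match", "match"), ("group_a", "match", "match"),
    ("group_a", "no_match", "no_match"), ("group_a", "match", "no_match"),
    ("group_b", "match", "no_match"), ("group_b", "no_match", "match"),
    ("group_b", "match", "match"), ("group_b", "no_match", "no_match"),
]
rates = error_rates_by_group(records)
print(rates)                   # {'group_a': 0.25, 'group_b': 0.5}
print(disparity_ratio(rates))  # 2.0
```

Here group_b is misclassified twice as often as group_a, exactly the kind of disparity a public audit should surface and a company should be required to explain.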

Conclusion

It is indeed a fascinating time to be alive. The rate of technological advancement in artificial intelligence and machine learning over the past few years has been breathtaking. The global pandemic upended our established cultural and social norms, kicking off a collective reassessment of the value of our time and our freedoms. At the individual level, people are reassessing their relationship with work and material needs. Remote work has become widely accepted, even expected by many, which has facilitated the migration of thousands from urban to rural areas, and in some cases to other countries.

This has created both a challenge for managers trying to understand the productivity of remote workers and a demand for monitoring software to spy on said workers and ensure their “productivity”. The “normalization” of surveillance extends into public space as well, where it is increasingly being employed by governments and local authorities to gather exabytes of data on their citizens. This data is then made available to rapidly evolving AI and machine learning algorithms that weave it into a fabric of information, allowing as-yet-unrealized correlations to be discovered, analyzed and ultimately used to make automated decisions that affect every aspect of our lives, for better or worse.

The hasty expansion and normalization of mass surveillance and algorithmic decision making, and their use to advance corporate and state control, are a tragic yet predictable result of our own worst instincts. Those very instincts, enabled and amplified by technology, have in a few short years polarized our societies in an unprecedented fashion.

I submit that there is an inverse qualitative relationship between surveillance-enabled AI decision making and our freedoms.

Let us never forget that freedom’s red door is, but with bloodied fists beaten.

Footnotes

1. https://www.cbc.ca/radio/thecurrent/how-the-working-for-workers-act-could-empower-employees-in-ontario-1.6614498
2. https://financialpost.com/fp-work/ontario-pledges-to-become-first-province-to-protect-workers-from-digital-spying-by-bosses
3. https://www.replicon.com/monitoring-of-employees-a-global-regulatory-perspective/
4. https://tem.fi/en/protection-of-privacy-at-work
5. https://www.insightful.io/blog/employee-monitoring-legal
6. https://freedomhouse.org/countries/freedom-world/scores?sort=desc&order=Civil%20Liberties
7. https://www.dataguidance.com/news/spain-aepd-fines-muxers-concept-20000-processing
8. https://www.insightful.io/blog/employee-monitoring-legal
9. https://www.pewresearch.org/internet/2015/05/20/americans-views-about-data-collection-and-security/
10. https://www.comparitech.com/blog/vpn-privacy/employee-monitoring-statistics/
11. https://www.youtube.com/watch?v=f8FBjsi-svI
12. https://www.bnnbloomberg.ca/don-t-spy-on-employees-to-ensure-they-re-working-microsoft-says-1.1822238
13. https://content.techgig.com/hiring/satya-nadella-outlines-the-paradox-of-the-argument-over-remote-work/articleshow/94936970.cms
14. https://ifex.org/office-365-and-working-from-home-is-your-boss-spying-on-you/
15. https://ised-isde.canada.ca/site/ised/en/advisory-council/member-biographies/final-report-covid-alert-public-health-tool#2
16. https://www.priv.gc.ca/en/privacy-topics/health-genetic-and-other-body-information/health-emergencies/rev_covid-app/
17. https://nationalpost.com/news/canada/canadas-public-health-agency-admits-it-tracked-33-million-mobile-devices-during-lockdown
18. https://www150.statcan.gc.ca/n1/pub/45-28-0001/2020001/article/00059-eng.htm
19. https://www.goodreads.com/book/show/551520.The_Wisdom_of_Insecurity
20. https://www.interceptinghorizons.com/post/the-black-box-problem-when-ai-makes-decisions-that-no-human-can-explain
21. https://venturebeat.com/ai/the-predictive-powers-of-ai-could-make-human-forecasters-obsolete/
22. https://www.hklaw.com/en/insights/publications/2022/04/ai-is-improving-its-artistic-skills-but-who-owns-its-output
23. https://libraryofbabel.info/About.html
24. https://www.forbes.com/sites/forbescoachescouncil/2022/06/24/ai-writing-assistants-are-they-worth-using-in-2022/?sh=4505eb0d3d30
25. https://aeon.co/essays/your-brain-does-not-process-information-and-it-is-not-a-computer
26. https://www.technologyreview.com/2022/10/24/1062071/do-ai-systems-need-to-come-with-safety-warnings/
27. https://www.aclu.org/news/privacy-technology/how-artificial-intelligence-can-deepen-racial-and-economic-inequities