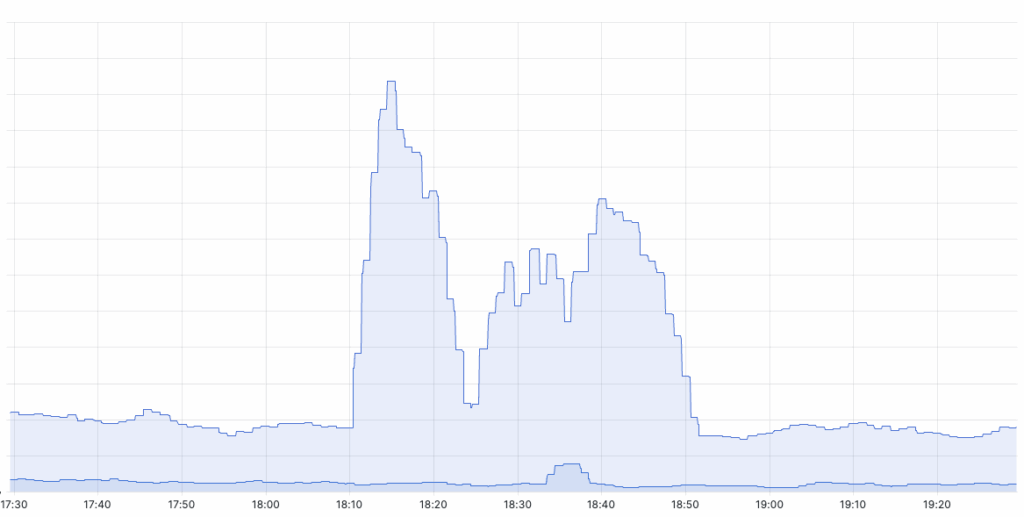

Web analytics is a tricky business. Your customers’ problems are your problems, and nowhere is this clearer than when a DDoS attack or an overzealous crawler comes knocking. At first, the traffic looks normal: requests flood in, metrics tick upward, and everything seems fine. Then a pattern emerges, and something is off. Pages reload at machine precision. Sessions stretch into the hundreds. A single “user” generates more events than an entire marketing campaign.

You can’t just throttle the traffic. Mixed in with the noise are real people, and our OVH DDoS protection, rightfully, doesn’t bat an eye. This isn’t network-layer abuse; it’s an application-layer problem.

Today was our turn

Our infrastructure normally chews through thousands of events per minute without breaking a sweat. Yes, bots are always there (no, your vendor’s “100% bot-free” guarantee is a lie), but the signal usually drowns out the noise. Then came this crawler. It didn’t just scrape a site; it obsessively re-scraped one with thousands of pages, hammering the same URLs again and again, as if stuck in a loop. The result? A firehose of data that clogged our aggregation pipeline.

We weren’t defenceless. We’d already set limits on events per session – but only for aggregations (bounce rates, session durations, etc.). The raw events? Those kept flowing, unchecked. Today, the crawler hit that gap.
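To make that gap concrete, here is a minimal Python sketch, assuming each event carries a session ID and a timestamp. The names `Event`, `aggregate_sessions`, `ingest_raw` and the cap value are hypothetical; the post does not describe the real pipeline or its limits. The point is only that a per-session cap applied in the aggregation path does nothing for the raw ingestion path.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical cap for illustration; the real limit is not stated in the post.
MAX_EVENTS_PER_SESSION = 500


@dataclass
class Event:
    session_id: str
    timestamp: float  # seconds since epoch
    url: str


def aggregate_sessions(events: list[Event]) -> dict[str, int]:
    """Count events per session for aggregate metrics, capping each session.

    A runaway session contributes at most MAX_EVENTS_PER_SESSION events to
    aggregates such as bounce rate or session duration.
    """
    counts: dict[str, int] = defaultdict(int)
    for e in events:
        if counts[e.session_id] < MAX_EVENTS_PER_SESSION:
            counts[e.session_id] += 1
    return dict(counts)


def ingest_raw(events: list[Event], raw_store: list[Event]) -> None:
    """Raw ingestion path: no per-session cap, so every event is stored.

    This uncapped path is the gap a looping crawler can flood.
    """
    raw_store.extend(events)
```

With a crawler session of 10,000 events, the aggregate for that session stops at 500, while the raw store still receives all 10,000 events.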

The fix? A two-part defence, deployed in hours:

- Statistical tripwires: We analyse intra-session behaviour. Consecutive visits with timing that is too perfect? More visits per minute than human reflexes allow? Flagged.

- Surgical exclusion: Bot-marked sessions now vanish from stats entirely: no skewed averages, no bloated reports. The raw, hashed events stay archived, though, because we’re paranoid (i.e., prepared). If a customer ever cries foul, we can replay the data like a VCR tape.
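The two steps above could look roughly like the following Python sketch. It is an illustration under stated assumptions, not the production code: the thresholds are hypothetical, "too perfect" timing is approximated by a low coefficient of variation of the gaps between events, and "visits per minute" is measured as the busiest sliding 60-second window. Bot-marked sessions are dropped only from the list used for statistics; the input events, standing in for the raw archive, are left untouched.

```python
import statistics
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical thresholds for illustration only; the real values are not published.
MIN_EVENTS_FOR_TIMING_TEST = 20  # too few events say little about regularity
MAX_INTERVAL_CV = 0.05           # gap std-dev / mean gap; lower = more machine-like
MAX_EVENTS_PER_MINUTE = 120      # busiest 60 s window a human plausibly produces


@dataclass
class Event:
    session_id: str
    timestamp: float  # seconds


def has_machine_timing(ts: list[float]) -> bool:
    """Tripwire 1: gaps between consecutive events are suspiciously uniform."""
    if len(ts) < MIN_EVENTS_FOR_TIMING_TEST:
        return False
    gaps = [b - a for a, b in zip(ts, ts[1:])]
    mean_gap = statistics.fmean(gaps)
    if mean_gap == 0:
        return True  # many events sharing one timestamp
    return statistics.pstdev(gaps) / mean_gap < MAX_INTERVAL_CV


def max_events_in_window(ts: list[float], window: float = 60.0) -> int:
    """Largest number of events inside any sliding window (ts must be sorted)."""
    best = 0
    start = 0
    for end in range(len(ts)):
        while ts[end] - ts[start] >= window:
            start += 1
        best = max(best, end - start + 1)
    return best


def is_bot_session(timestamps: list[float]) -> bool:
    ts = sorted(timestamps)
    # Tripwire 2: more events per minute than human reflexes allow.
    return has_machine_timing(ts) or max_events_in_window(ts) > MAX_EVENTS_PER_MINUTE


def split_sessions(events: list[Event]) -> tuple[list[Event], set[str]]:
    """Return (events kept for stats, IDs of bot-marked sessions).

    The input list is not modified, so the raw events remain available for replay.
    """
    timestamps: dict[str, list[float]] = defaultdict(list)
    for e in events:
        timestamps[e.session_id].append(e.timestamp)
    bots = {sid for sid, ts in timestamps.items() if is_bot_session(ts)}
    stats_events = [e for e in events if e.session_id not in bots]
    return stats_events, bots
```

In this sketch, a session requesting a page exactly every 2.0 seconds trips the timing test (its gaps have zero variance), while 200 irregularly spaced events inside one minute trip the rate test. A handful of scattered human visits trips neither.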

Will it hurt customers?

Unlikely. The thresholds are so high that hitting them requires either:

- A human with a caffeine IV drip and a vendetta, or

- A website misconfigured to spam its own analytics.

Is it perfect?

Nope. Edge cases exist. False positives are theoretically possible. But here’s the thing about scaling web analytics: you optimise for the 99.9%, and you take refuge in large numbers.

Perfect is the enemy of good. And today? Good meant the pipeline stayed up and the dashboards stayed accurate.

No Cookie Banners. Resilient against AdBlockers.

Try Wide Angle Analytics today!